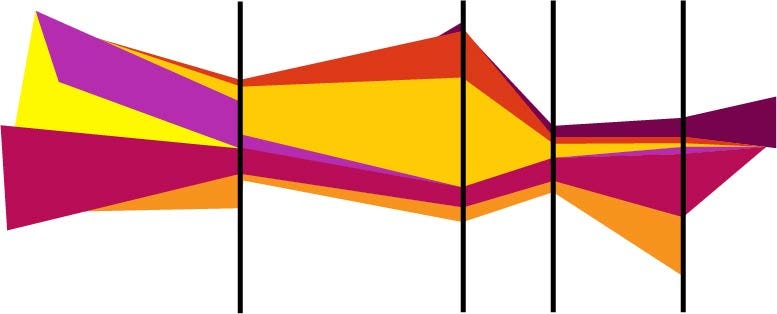

Project teams, Visualized

Project teams are complicated, gnarly beasts. How many people will this feature need, from which functions, and when?

Every company has its own template, its own routine for how its projects play out. These routines fascinate me, so I’ve put some time into modeling the way we run projects at Yammer.

If you find the following a valuable way to understand someone else’s product org, consider taking the time to model your own! I’d love to see other product teams’ routines as well.

The Five Phases of a Yammer Project

Every project is a snowflake. That said, they almost all follow these 5 phases:

- As the project starts we begin with a Design phase.

- When the project is ready for engineers, we hold a kickoff meeting and start to Build. Yes, there is a whole (significant) phase before the “kickoff” happens.

- Once we’re code complete and 🐛s are all 🔨ed, we turn on the A/B Test.

- After a pre-determined period of time (usually 2 weeks), we’ll Analyze the test results.

- Ship the feature or kill it. Either way, there’s Cleanup to be done.

Along the way there are cycles and iterations. In building, you uncover a crucial edge case… go back to Design. In the test you find something that’s broken… go back to Build. In the analysis you discover mismatching treatment groups… go back to A/B Test, etc.
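For readers who think in code, here is a minimal sketch of the five phases and the step-back loops described above. The names `Phase`, `STEP_BACK`, and `next_phase` are hypothetical, invented for illustration, and the mapping only covers the three loops named in the text; real projects can cycle in other ways.

```python
from enum import Enum


class Phase(Enum):
    DESIGN = "Design"
    BUILD = "Build"
    AB_TEST = "A/B Test"
    ANALYZE = "Analyze"
    CLEANUP = "Cleanup"


# Phases in their usual forward order (Enum members iterate in definition order).
ORDER = list(Phase)

# The step-back loops named in the text: a problem found in one phase
# sends the project back to the phase before it.
STEP_BACK = {
    Phase.BUILD: Phase.DESIGN,     # a crucial edge case turns up
    Phase.AB_TEST: Phase.BUILD,    # something is broken in the test
    Phase.ANALYZE: Phase.AB_TEST,  # mismatching treatment groups
}


def next_phase(current, problem_found=False):
    """Return the phase a project moves to next."""
    if problem_found and current in STEP_BACK:
        return STEP_BACK[current]
    # Otherwise move forward, staying in Cleanup once the project gets there.
    idx = ORDER.index(current)
    return ORDER[min(idx + 1, len(ORDER) - 1)]
```

Calling `next_phase(Phase.BUILD)` moves the project forward to the A/B Test, while `next_phase(Phase.BUILD, problem_found=True)` sends it back to Design.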

The Project Model

If you look closely at this model and assume a 2-week testing timeline, you can extrapolate that design takes about a month, building takes 4–6 weeks, analysis takes roughly 3 weeks, and cleanup never ends.

For some projects that’s pretty close. For others, it’s way off.
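To put a rough number on the whole thing, the phase estimates above can be added up. This is a back-of-the-envelope sketch, not a planning tool: it assumes "about a month" means 4 weeks, leaves Cleanup out because it is open-ended, and uses the made-up names `PHASE_WEEKS` and `total_weeks`.

```python
# Rough phase durations in weeks as (low, high), taken from the estimates above.
# Assumption: "about a month" of Design is treated as 4 weeks.
PHASE_WEEKS = {
    "Design": (4, 4),
    "Build": (4, 6),
    "A/B Test": (2, 2),
    "Analyze": (3, 3),
}


def total_weeks(phases):
    """Sum the low and high estimates across all phases."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


low, high = total_weeks(PHASE_WEEKS)
print(f"Design through Analyze: roughly {low}-{high} weeks, plus Cleanup")
```

Under those assumptions, a project runs about 13 to 15 weeks from the start of Design to a ship or kill call.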

In general, this model demonstrates the ever-fluctuating levels of effort and involvement from the various functions throughout the whole process.

Let’s dig into them individually, shall we?

As you can probably guess, designers tend to do most of their work in the Design phase. At the beginning, the designer does three things:

- Wrap their brain around the problem and goal

- Iterate on UX flows and wireframes until they pass product review

- Begin working on visuals

Once the project moves into the Build phase, designers are significantly less involved. They consult on threads, join for the weekly meetings, and have final say on UX and UI decisions that come up, but often their work is on a steady decline during Build (with a slight uptick right before code complete).

The A/B Test phase is essentially silent for designers, but they get involved again during Analyze, offering insight and challenging analysts and PMs in their decisions.

Cleanup is also pretty light for designers, but they do spend some time here documenting new UI patterns and sharing back with their functional teams about what they learned.

Onboard: Every project has roughly 1 designer

Their Projects: Designers tend to be on 3–5 projects at a time

In my experience, researchers predominantly work with project teams in the Design phase to make sure we’re on the right path. They do this in two main ways:

- As stewards of historical knowledge, they point the team away from user-blindness pitfalls

- When needed, they’ll conduct specific studies, surveys, usability tests or interviews for your project

On average, Researchers at Yammer tend to work on larger scale questions, lighting the way as we move forward with the product strategy overall. They do, however, serve as consultants as questions arise in the Build and Analyze phases.

Onboard: Every project has roughly 1 Researcher

Their Projects: Researchers tend to be on 10–15 projects at a time

Having analysts get involved in the Design phase is such glory. It really is an embarrassment of riches. They vet hypotheses, help guide the most testable MVPs, and do a 💩 ton of digging through data along the way.

Their work slows down a bit during the Build phase, where their primary responsibility is ensuring that logging has been identified and instrumented correctly.

Ideally, there’s not much to be done during the A/B Test phase other than check the vital signs of the experiment. Analysts monitor as experiments roll out and are often the first to raise a flag if something looks amiss. Again, ideally they don’t have much to do here.

Shocking to exactly no one, the majority of an analyst’s work happens in the Analyze phase. They pore over results and dig deeper when unexpected things (invariably) turn up.

Not much Cleanup for them after a Ship or Kill call has been made, though they do document and spread the learning around the org.

Onboard: Every project has 1 analyst

Their Projects: Analysts tend to be on 8–10 projects at a time

Not much explaining to do here. Engineers build, yo!

Ok, that oversimplifies a bit. They are a crucial part of the Design phase—pushing back against platform anti-patterns, excessive cost and code complexity.

From tech spec to bug fixes, engineers are responsible for most of the Build phase.

When we move to A/B Test, engineers stay close by for a day or two before starting to roll off (if all went well).

They are usually on another project by the time we Analyze results, but they participate in those discussions with observations and opinions.

No matter how the cookie crumbles, there’s always code Cleanup they come back for in the end. If the test wins, they turn it to 100% and remove the experiment scaffolding from the system. If it loses, they remove all the code from that test.

Onboard: Every project has 1–10 engineers

Their Projects: Engineers are only ever staffed to 1 project at a time!

Another embarrassment of riches is quality engineering (QE). They are only marginally involved during the Design phase, but really roll up their sleeves after the engineering kickoff.

Right at the beginning of the Build phase, QE runs an edge case bash—my absolute favorite kind of product meeting. Using the findings from that meeting, they build the necessary automated testing and prep for the manual testing as well.

QE are the gatekeepers that decide when a feature is ready to move to A/B Test, and when it needs (another!) bug bash. Once the feature is in A/B testing, they monitor along with engineers and analysts and check for regressions.

As with engineers, QE aren’t terribly involved in the Analyze phase, but do have code Cleanup after the Ship/Kill.

Onboard: Every project has 1 or 2 quality engineers

Their Projects: Quality engineers are usually staffed to 3–4 projects at a time.

Obviously, our product marketing managers get involved when we are preparing to announce new features. But they also do a fair amount of messaging and expectation setting before we turn on an A/B test.

Onboard: Every project has a product marketing point person

Their Projects: Product marketers are on… all of the projects. All of them.

Finally, we have product managers. We start defining the problem and goal at the genesis of the Design phase, but bring in designers, researchers, and analysts pretty quickly.

Once the goal, hypothesis, success metrics and general UX have passed product review (and once we have dedicated engineers available), we schedule a kickoff with the entire project team to begin the Build phase.

There’s a lot of alignment work at the beginning of Build to ensure everyone is working toward a shared understanding of the goal. As Build progresses, we aren’t terribly involved, but work to keep momentum and morale up while ensuring that nothing falls through the cracks.

There’s almost nothing to do during the A/B Test phase other than resist the urge to peek at the data early.

We jump back in the saddle once the Analyze phase starts and work closely with the analyst to make a ship or kill decision.

Once that decision is finalized, we once again take on the “make sure of XYZ” persona and follow up on all the necessary Cleanup.

Onboard: Every project has only 1 PM—just imagine if there were 2 or 3! OY!

Their Projects: Product managers are on 4–7 projects at a time.

And that’s a wrap!

Some grains of salt as you walk away:

- We don’t A/B test everything, but pretty darn close to it. Our default is to test, but given a compelling enough reason, we won’t.

- I built this model to fit reality, but it is reality as seen through my lens. Ask another PM or engineer with a few more years at Yammer and their model may vary slightly.

If you enjoyed reading through our process here, share some 💚 below, or follow me for more pieces like this.

Also, I encourage you to model your org’s process as well! This exercise was quite illuminating for myself and others on the team—a helpful tool for discussing functional roles across the company.

And if you do create your own model, please let me know! I’d love to see it.